Introduction to Coding

Prerequisites Required: None

To write a computer program, also called “coding”, is to convey a set of instructions that a machine is to carry out. Nothing more. Nothing less. Just as you can write a list of instructions for your friend to follow, so too can you write instructions for a machine. Early computer engineers recognized that everyday English is an awful language for communicating precise instructions. Consider the following sentence:

"Please do the dishes and make dinner."

Which action should be performed first? The sentence might imply that the dishes should be done first, but if you make dinner first and then do the dishes, the instruction has still been followed.

Early computer engineers also recognized that yelling English (or any spoken language really) at electronics didn't cause them to do much.

The Evolution of Coding

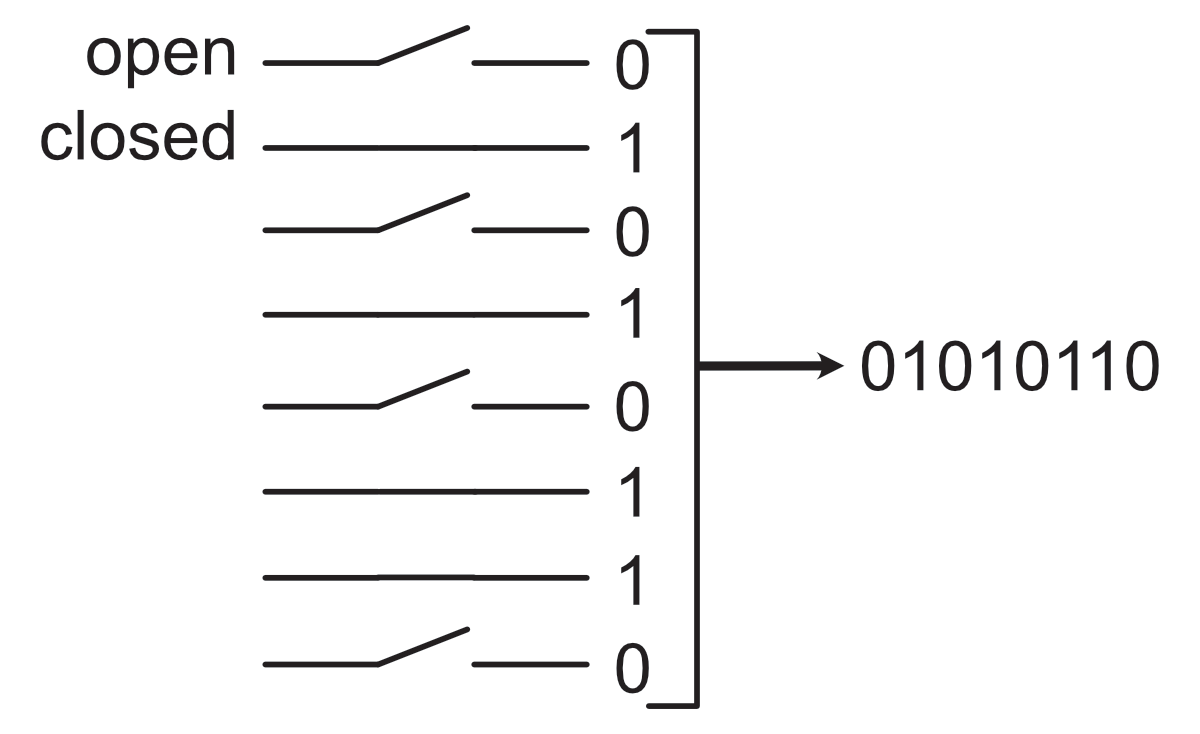

The electronic switch, which turns a voltage on or off, was found to be a simple and effective means of communicating information in electronic circuits. To match this means of communication, early computer engineers adopted binary. Binary only has two characters, $0$ and $1$, which are assigned to "off" and "on" respectively. A table of this assignment is shown below:

| Switch State | Binary Value |
|---|---|
| ON | 1 |
| OFF | 0 |

Table 1: Mapping of switch states to binary.

Using binary, we can literally describe the state of a set of switches.
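Because each switch is either on or off, describing a row of switches is just a matter of writing one binary character per switch. Here is a minimal Python sketch of that idea using the mapping from Table 1. Representing the switches as a list of `True`/`False` values, and the names `switches_to_binary` and `binary_to_switches`, are illustrative assumptions, not part of any real hardware interface.

```python
# A minimal sketch: describe a row of switches with binary characters,
# using the mapping from Table 1 (ON -> "1", OFF -> "0").
# The list-of-booleans representation is an illustrative assumption.

def switches_to_binary(states: list[bool]) -> str:
    """Return one binary character per switch, left to right."""
    return "".join("1" if is_on else "0" for is_on in states)


def binary_to_switches(bits: str) -> list[bool]:
    """Reverse mapping: turn a string of 0s and 1s back into switch states."""
    if any(ch not in "01" for ch in bits):
        raise ValueError("binary strings may only contain 0 and 1")
    return [ch == "1" for ch in bits]


# Eight switches: OFF, ON, ON, ON, OFF, ON, ON, OFF
row = [False, True, True, True, False, True, True, False]
print(switches_to_binary(row))  # 01110110
```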

Binary characters are combined to form well-defined, unambiguous instructions for a set of switches in a computer. For example, the instruction for "do nothing" on an Intel 8080 processor (released in 1974) is $00000000$.

$11111111$ is the instruction that causes the Intel 8080 to take a break from the current instructions and execute a different section of instructions, eventually returning to where it left off.

$01110110$ is the instruction that causes the Intel 8080 to halt.

You can imagine how long even a small and simple set of instructions might be:

01111000 01000111 10000000 01010011

10000010 01000001 00000000 11111111

01010011 10000010 01000001 01010011

10000010 01000001 01010011 10000010

01000001 01110110

Intel 8080 Binary Instructions Example

The above instructions move a few numbers around and do some addition; not particularly exciting. Computer engineers recognized that instructions like this were really long to write and adopted a shorthand called hexadecimal. While the characters of binary are $0$ or $1$, the characters of hexadecimal are $0$, $1$, $2$, $3$, $4$, $5$, $6$, $7$, $8$, $9$, $A$, $B$, $C$, $D$, $E$, $F$. Each hexadecimal character stands for exactly four binary characters, so every eight-character binary instruction shrinks to two hexadecimal characters.

The command $00000000$ is $00$ in hexadecimal, $11111111$ is $FF$, and $01110110$ is $76$ (the first four characters, $0111$, are $7$, and the last four, $0110$, are $6$). After converting the Intel 8080 binary example to hexadecimal, the Intel 8080 example program is:

78 47 80 53 82 41 00 FF 53 82 41 53 82 41 53 82 41 76

Intel 8080 Hexadecimal Instructions Example

The hexadecimal form of a command is much more compact and lends itself better to human communication. Remember though, it's still a shorthand. Hexadecimal commands must be converted back into binary in order to command the switches in the computer.
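Because every hexadecimal character stands for four binary characters, converting in either direction is mechanical. The minimal Python sketch below converts the binary program listing into the hexadecimal listing and back, using Python's built-in `int(text, base)` to read a number and `format(number, spec)` to write it. The function names `binary_to_hex` and `hex_to_binary` are illustrative.

```python
# A minimal sketch of the binary <-> hexadecimal shorthand.
# Each 8-bit instruction becomes exactly two hex characters, and back.

BINARY_PROGRAM = """
01111000 01000111 10000000 01010011
10000010 01000001 00000000 11111111
01010011 10000010 01000001 01010011
10000010 01000001 01010011 10000010
01000001 01110110
"""


def binary_to_hex(binary_text: str) -> str:
    """Convert whitespace-separated 8-bit binary words to two-digit hex."""
    # int(word, 2) reads the word as a base-2 number;
    # format(..., "02X") writes it as two uppercase hex characters.
    return " ".join(format(int(word, 2), "02X") for word in binary_text.split())


def hex_to_binary(hex_text: str) -> str:
    """Expand each two-digit hex byte back into its 8 binary characters."""
    return " ".join(format(int(byte, 16), "08b") for byte in hex_text.split())


hex_program = binary_to_hex(BINARY_PROGRAM)
print(hex_program)
# prints: 78 47 80 53 82 41 00 FF 53 82 41 53 82 41 53 82 41 76
```

Going from hexadecimal back to binary with `hex_to_binary` is the step that has to happen before the switches in the computer can be set.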

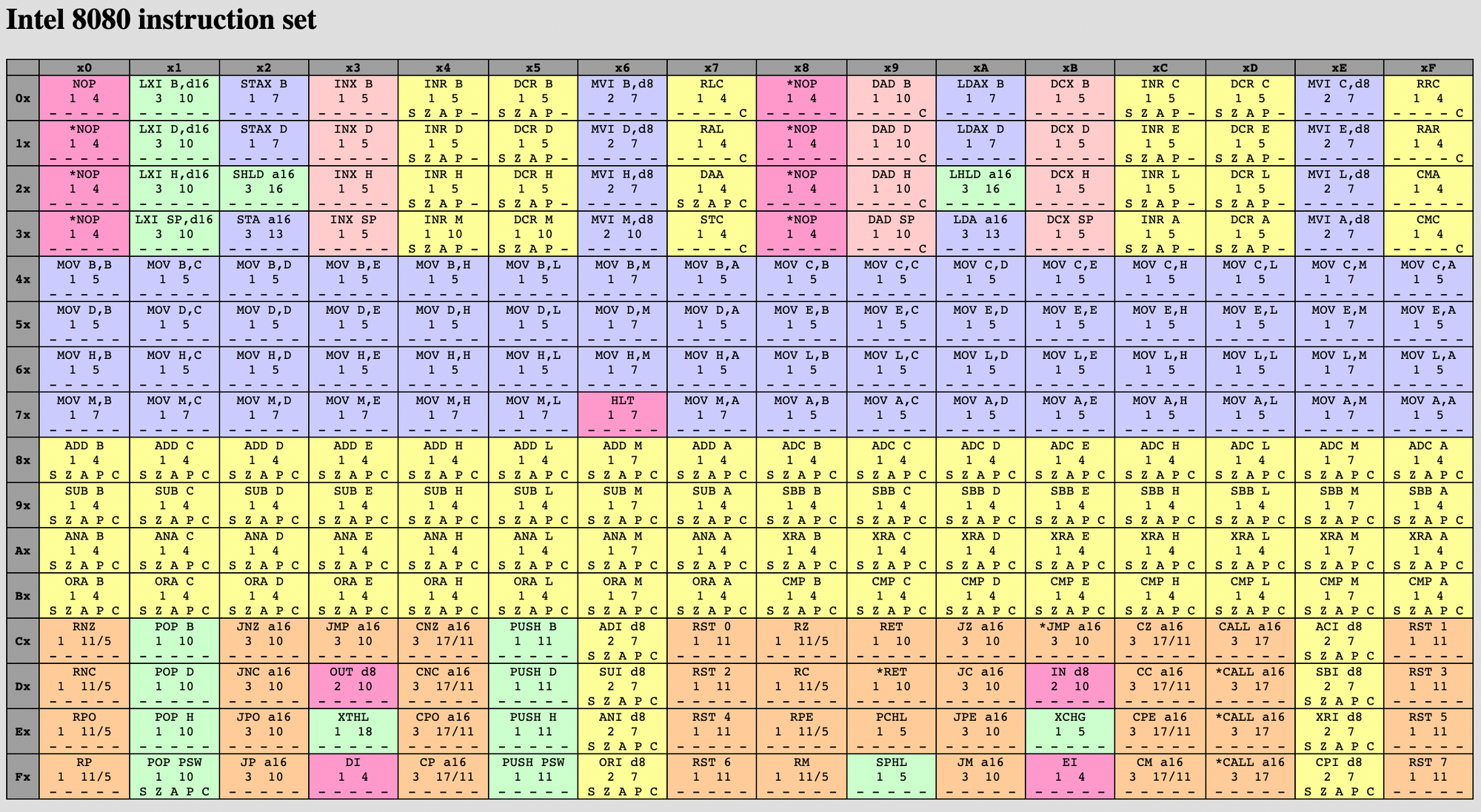

Below is the scary chart that cryptically lists all of the instructions for the Intel 8080. I don't expect you to understand this chart or the 8080's instructions in detail. This processor is about 50 years old and not commonly used today; modern computers can perform roughly 200,000 times more calculations per second. Compared to modern computers, however, the Intel 8080 is much simpler: all of its instructions fit in a single table. Modern Intel processors have thousands of possible instructions.

The only understanding of this chart you need is that the chart exists and that each instruction is mapped to a specific hexadecimal number, whose binary representation is what actually controls the computer. The chart is available at Intel 8080 Instruction Set Chart and is also shown in Figure 2.

Along the left-hand side is the first hexadecimal character of the command, and along the top is the second. The command of interest is found where the two characters intersect (left first, then top). Observe that 7 on the left and 6 on the top intersect at "HLT", the halt command $76$, or $01110110$, that we've already discussed.
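The row-and-column lookup can be mimicked in a few lines of Python. The sketch below splits an opcode into its first (row) and second (column) hexadecimal characters with `divmod`, then looks up the mnemonic in a deliberately tiny table holding only the opcodes that appear in this article's examples; the real chart has far more entries. The names `OPCODES`, `lookup`, and `disassemble` are illustrative.

```python
# A minimal sketch of reading the 8080 chart programmatically.
# Only the handful of opcodes used in this article are included;
# a full table would cover every cell of the chart.

# Keyed by (row, column): row = first hex character, column = second.
OPCODES = {
    (0x0, 0x0): "NOP",
    (0x4, 0x1): "MOV B,C",
    (0x4, 0x7): "MOV B,A",
    (0x5, 0x3): "MOV D,E",
    (0x7, 0x6): "HLT",
    (0x7, 0x8): "MOV A,B",
    (0x7, 0xD): "MOV A,L",
    (0x8, 0x0): "ADD B",
    (0x8, 0x2): "ADD D",
    (0xF, 0xF): "RST 7",
}


def lookup(opcode: int) -> str:
    """Find an opcode's mnemonic: left-hand row first, then top column."""
    row, column = divmod(opcode, 16)  # first and second hex characters
    return OPCODES.get((row, column), f"?? ({opcode:02X} not in this partial table)")


def disassemble(hex_text: str) -> list[str]:
    """Translate a space-separated hex listing into mnemonics."""
    return [lookup(int(byte, 16)) for byte in hex_text.split()]


program = "78 47 80 53 82 41 00 FF 53 82 41 53 82 41 53 82 41 76"
for mnemonic in disassemble(program):
    print(mnemonic)
```

The sketch assumes every instruction is a single byte, which holds for this example program but not for the 8080 in general; `CALL`, for example, is followed by a two-byte address, so a real disassembler has to know each instruction's length.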

At this point, computer engineers have solved both the challenge of communicating with the computer (by using switches and binary) and the problem of instructions being vague (by designing a specific set of instructions with an exacting behavior) but in the process have introduced a new problem. Humans have a really hard time reading binary and hexadecimal!

To read either of these two programs takes a lot of referencing back and forth between the code and the computer instruction manual.

01111000 01000111 10000000 01010011

10000010 01000001 00000000 11111111

01010011 10000010 01000001 01010011

10000010 01000001 01010011 10000010

01000001 01110110

Intel 8080 Binary Instructions Example

78 47 80 53 82 41 00 FF 53 82 41 53 82 41 53 82 41 76

Intel 8080 Hexadecimal Instructions Example

To alleviate the difficulty of reading binary or hexadecimal instructions, computer engineers invented assembly language, which uses short, word-like abbreviations (called mnemonics) to represent specific instructions from the instruction set.

MOV A,L

ADD M

SHLD RAND

POP H

RET

CLS:

MVI C,1FH

CALL 0F809H

Small Sample of Intel 8080 Assembly Code

Even without knowing assembly, I think you will agree that while this language is still difficult to read, it's a lot easier to read than raw binary or hexadecimal.

Observe from the Intel 8080 instruction set chart that the first line of the assembly code sample, "MOV A,L", corresponds to the hexadecimal $7D$, which corresponds to the binary input $01111101$. You don't need to understand what this snippet of assembly code does; only recognize that this code maps directly to the computer's underlying instruction set.

In order to translate between the more human-readable assembly language and the binary program, computer engineers wrote computer programs called assemblers. These programs take in assembly code and output binary and/or hexadecimal.
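To show what an assembler does, here is a toy one in Python. It is a sketch, not a real 8080 assembler: it understands only `NOP`, `HLT`, `MOV`, `ADD`, and `RST`, assumes one instruction per line, and ignores labels, comments, and multi-byte instructions. It builds each opcode from the 8080's bit patterns for these instructions, in which each register has a three-bit code (B=000, C=001, D=010, E=011, H=100, L=101, M=110, A=111). The names `assemble_line` and `assemble` are illustrative.

```python
# A toy assembler for a tiny subset of Intel 8080 assembly.
# Supported: NOP, HLT, MOV dst,src, ADD src, RST n (one instruction per line).
# Labels, comments, and multi-byte instructions (e.g. CALL, MVI) are not handled.

# Three-bit register codes used inside 8080 opcodes.
REGISTERS = {"B": 0b000, "C": 0b001, "D": 0b010, "E": 0b011,
             "H": 0b100, "L": 0b101, "M": 0b110, "A": 0b111}


def assemble_line(line: str) -> int:
    """Translate one line of assembly into a single opcode byte."""
    parts = line.strip().upper().split(maxsplit=1)
    mnemonic = parts[0]
    operands = [op.strip() for op in parts[1].split(",")] if len(parts) > 1 else []

    if mnemonic == "NOP":
        return 0x00
    if mnemonic == "HLT":
        return 0x76
    if mnemonic == "MOV":
        dst, src = (REGISTERS[op] for op in operands)
        if dst == src == REGISTERS["M"]:
            raise ValueError("MOV M,M does not exist; its slot is HLT")
        return 0b01000000 | (dst << 3) | src   # 01 ddd sss
    if mnemonic == "ADD":
        (src,) = (REGISTERS[op] for op in operands)
        return 0b10000000 | src                # 10000 sss
    if mnemonic == "RST":
        n = int(operands[0])
        if not 0 <= n <= 7:
            raise ValueError("RST takes a number from 0 to 7")
        return 0b11000111 | (n << 3)           # 11 nnn 111
    raise ValueError(f"unsupported instruction: {line!r}")


def assemble(source: str) -> str:
    """Assemble a multi-line program and return it as a hex listing."""
    lines = [ln for ln in source.splitlines() if ln.strip()]
    return " ".join(format(assemble_line(ln), "02X") for ln in lines)


# The example program from earlier, written as assembly.
SOURCE = """
MOV A,B
MOV B,A
ADD B
MOV D,E
ADD D
MOV B,C
NOP
RST 7
MOV D,E
ADD D
MOV B,C
MOV D,E
ADD D
MOV B,C
MOV D,E
ADD D
MOV B,C
HLT
"""

print(assemble(SOURCE))
# prints: 78 47 80 53 82 41 00 FF 53 82 41 53 82 41 53 82 41 76
```

Running it reproduces the hexadecimal listing of the example program, and `assemble_line("MOV A,L")` returns `0x7D`, matching the chart. A real assembler also resolves labels such as `CLS:`, handles comments, and emits the extra data or address bytes that instructions like `MVI C,1FH` and `CALL 0F809H` carry.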

It's really important to recognize that because the assembly code maps directly to the computer's instruction set, every unique instruction set has its own assembly language!

American computer manufacturers realized pretty quickly that these instruction sets, assembly languages, assemblers, and electrical designs take a lot of expertise to develop but are pretty easy to copy. Because their primary business is selling computers, these manufacturers fiercely defend their patentable computer designs and copyrighted assembly languages.

New manufacturers either pay royalties (if such arrangements are even available) or invent brand new instruction sets and assembly languages. It became a large burden for programmers to support multiple processors, literally having to rewrite their programs for every computer (even computers from the same company often came with different assembly languages as computers got more powerful).

General Purpose Languages

In 1957, amidst a storm of computer architectures and assembly languages, John Backus led a team at IBM to develop the first mainstream 'general purpose' programming language, FORTRAN. The goal of FORTRAN was to create a more human-intelligible programming language that was computer agnostic. Any programmer familiar with FORTRAN could write it once and run it on any computer that supported FORTRAN. This was a holy grail of computing.

To accomplish this feat, a program called a compiler was written to convert FORTRAN code into assembly, which would then be assembled by the computer's assembler into binary code to run on the computer.
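The compile-then-assemble pipeline can be sketched with a toy "compiler" for a made-up language whose only statement is a sum of small whole numbers, such as `2 + 3 + 4`. This is purely illustrative and vastly simpler than a real FORTRAN compiler. It emits Intel 8080 assembly that loads the first number into register A with `MVI`, adds each remaining number with `ADI`, and stops with `HLT`; a second step then assembles that text using the 8080 opcodes for these instructions (`MVI A` is 3E, `ADI` is C6, `HLT` is 76), with each number following its opcode as one extra byte. The source language and the names `compile_sum` and `assemble_listing` are hypothetical.

```python
# A toy two-stage pipeline: "compile" a tiny made-up language to 8080
# assembly, then "assemble" that assembly into hexadecimal bytes.
# The source language (sums like "2 + 3 + 4") is a hypothetical example.

def compile_sum(expression: str) -> list[str]:
    """Compile 'a + b + ...' (whole numbers 0-255) into 8080 assembly lines."""
    numbers = [int(term) for term in expression.split("+")]
    if any(not 0 <= n <= 255 for n in numbers):
        raise ValueError("each number must fit in one byte (0-255)")
    assembly = [f"MVI A,{numbers[0]}"]             # load the first number into A
    assembly += [f"ADI {n}" for n in numbers[1:]]  # add each remaining number to A
    assembly.append("HLT")                         # stop the processor
    return assembly


def assemble_listing(assembly: list[str]) -> str:
    """Assemble the three instructions compile_sum emits into hex bytes."""
    output = []
    for line in assembly:
        mnemonic, _, operand = line.partition(" ")
        if mnemonic == "MVI":
            register, value = operand.split(",")
            if register != "A":
                raise ValueError("this sketch only supports MVI A")
            output += [0x3E, int(value)]           # opcode, then the number
        elif mnemonic == "ADI":
            output += [0xC6, int(operand)]         # opcode, then the number
        elif mnemonic == "HLT":
            output.append(0x76)
        else:
            raise ValueError(f"unsupported instruction: {line!r}")
    return " ".join(format(byte, "02X") for byte in output)


asm = compile_sum("2 + 3 + 4")
print(asm)                    # ['MVI A,2', 'ADI 3', 'ADI 4', 'HLT']
print(assemble_listing(asm))  # 3E 02 C6 03 C6 04 76
```

A real compiler has to handle variables, loops, and much larger numbers, and must choose instructions for one specific processor, which is why each computer needed its own FORTRAN compiler while the FORTRAN code itself could stay the same.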

With this new paradigm, computer manufacturers could focus on making hardware and a proprietary assembly language, FORTRAN developers could make compilers to convert FORTRAN to assembly language, and FORTRAN programmers could focus on their universal FORTRAN code without learning any assembly.

There isn't anything particularly special about FORTRAN itself, but it's the first example of stacking languages on top of each other to unify differences in underlying computers.

It didn't take long for engineers to recognize that if you can stack a language once, you can stack it again.

The fundamental language stacking principle is what brings us the rich breadth of high-level programming languages we have today, like Python, C, C++, Go, Java, JavaScript, Kotlin, Julia, PHP, C#, Swift, R, Ruby, Matlab, TypeScript, Scala, SQL, HTML, CSS, NoSQL, Rust, Perl, Dart, Basic, OpenCL, CUDA, FORTRAN, Objective-C, Gcode, and so much more.

We also need to acknowledge that a language doesn't need to be strictly text-based in order to provide value. LabVIEW, Microsoft Excel, Blockly, Simulink, and ladder logic are all visual programming languages built on layers and layers of lower-level languages. They provide really sophisticated functionality without the programmer having to understand the lower-level languages under the hood or write a lot of code as text.

The typical software engineer learns a small number of languages and becomes a subject matter expert in those languages. Mechatronics engineers aren't so lucky.

In addition to needing to know fundamental mechanical and electrical engineering, mechatronics engineers must be familiar enough with core principles of software engineering to pick up and start working in any high-level language with very little notice. This does NOT mean that the mechatronics engineer will ever be as skilled or proficient as a master software engineer. Instead, a mechatronics engineer just needs to be capable of understanding, debugging, and programming in any language with some level of proficiency (as opposed to being an expert in one or two languages).

While I have received formal training in C++ and Matlab, I have had to read, program, and debug all of the following languages: Python, C, C++, C#, Matlab, Swift, R, HTML, CSS, Basic, OpenCL, Objective-C, Dart, Gcode, LabVIEW, Excel, and Simulink. The most valuable part of my formal training was not learning to program in specific languages but building a solid foundation for quickly becoming productive in any language. I recommend you do the same.

The Future of Coding

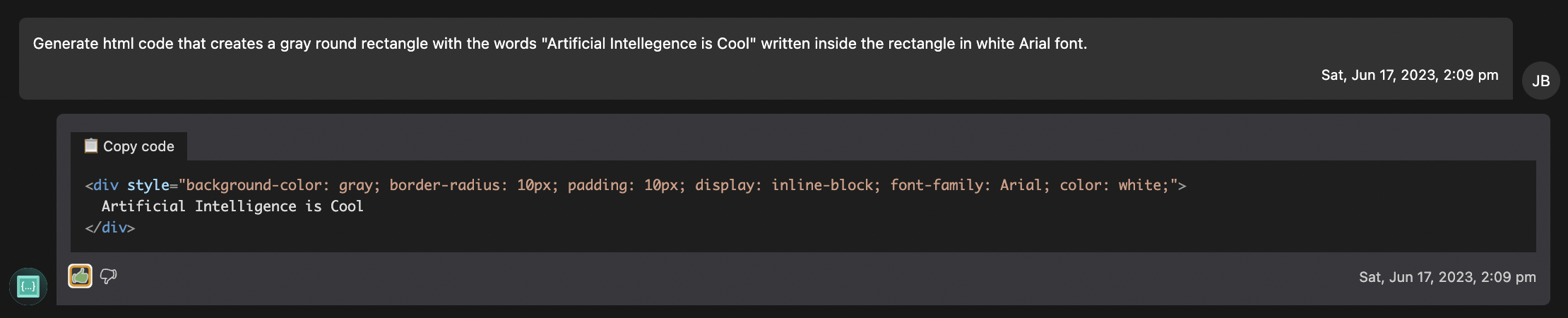

You've likely heard of generative artificial intelligence products like ChatGPT, GitHub Copilot, and Codeium that promise to generate code for you in any programming language. These tools turn the entire 'electronics don't understand English' problem on its head. Below is an example request I gave to Codeium to generate a rectangle in HTML and fill it with the words "Artificial Intelligence is Cool."

Below, I've copied and pasted the code given to me by Codeium into an HTML block.

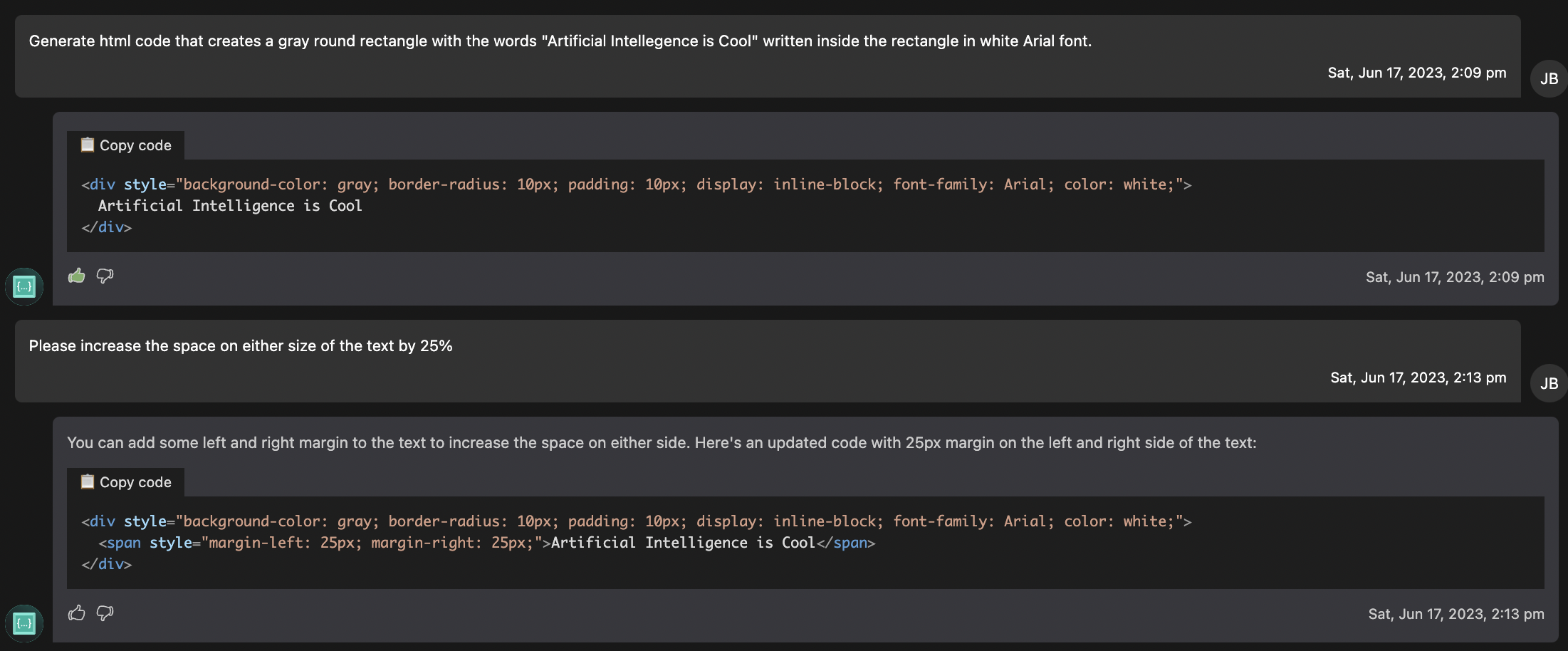

Astounding. I wanted more blank space around the words, and I didn't see an easy way to add that to the provided code, so I asked Codeium to help add some margin space around the words.

Below, I've copied and pasted the code given to me by Codeium into an HTML block.

Not exactly what I asked for, but definitely in the right direction! This technology is still at a very early stage, and sometimes the code provided by these tools doesn't work correctly (unlike compilers and assemblers, these tools use statistical models to estimate the high-level language code that will fulfill the needs of the user). Though not perfect, when these tools work, it's like magic (almost as good as being able to run a program on any computer).

As mechatronics engineers who understand the fundamentals of programming but aren't experts in any one language, we will find these tools game changing: they allow us to program in any language without having to learn and remember every little quirk of that language to be productive.

Final Thoughts

The intention of this article was to introduce you to how coding has evolved to its current state and give you a jumping off point; not for any specific language but for coding in general.

It can be REALLY tempting to dig deeper and deeper through all the layers that make up a computing ecosystem. Computers are amazing. However, computers are not normally the final work product of a mechatronics engineer; they are a tool. Coding is a means to get a computer to apply itself in our projects (rather than being the project in and of itself). Over the past 75+ years, software and computer engineers have done an outstanding job of making high-level languages easy, accessible, and reliable.

Mechatronics engineers typically obtain a light understanding of the lower level layers of computing (only digging into the details when absolutely needed) and focus on using high level programming languages to build projects.